SPRADH0 August 2024 AM625, AM6442, AM69, TDA4VM

4.1 Implemented Optimizations

The AM62x and AM64x EtherCAT controllers are tuned for better performance by disabling several background processes and tuning CODESYS-specific processes running in Linux on the ARM64 A53 cores. The main background processes disabled in the real-time “tisdk-default-image” from Software Development Kit (SDK) version 09.01.00.08 for AM62x are ti-apps-launcher, pulseaudio, systemd-timesyncd, telnetd, weston, and containerd. These processes are enabled by default to showcase features that are irrelevant to an EtherCAT application. Disabling them reduces the CPU load on the four A53 cores and brings the image as close as possible to the real-time “tisdk-thinlinux-image” for AM62x. The thin image is the target because it shows slightly better cycle time performance: the average cycle time drops from about 350µs on the default image to about 250µs on the thin image.
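As a minimal sketch, the listed processes might be disabled with a helper like the one below. It assumes each process runs as a systemd unit whose name matches the process name. That is an assumption, not something this document states, so check the actual unit names on the target (for example with `systemctl list-units`) before use. The helper names are hypothetical.

```python
import subprocess

# Process names from the text above; treating each as a systemd unit name
# is an ASSUMPTION, so verify the real unit names on the target first.
BACKGROUND_UNITS = [
    "ti-apps-launcher",
    "pulseaudio",
    "systemd-timesyncd",
    "telnetd",
    "weston",
    "containerd",
]


def build_disable_commands(units):
    """Return one `systemctl disable --now <unit>` command per unit."""
    return [["systemctl", "disable", "--now", unit] for unit in units]


def disable_units(units, dry_run=True):
    """Print each command; run it only when dry_run is False (needs root)."""
    for cmd in build_disable_commands(units):
        print(" ".join(cmd))
        if not dry_run:
            # check=False: keep going if a unit does not exist on this image.
            subprocess.run(cmd, check=False)


if __name__ == "__main__":
    disable_units(BACKGROUND_UNITS, dry_run=True)
```

The dry run is the default so that the commands can be reviewed before anything is changed on the target.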

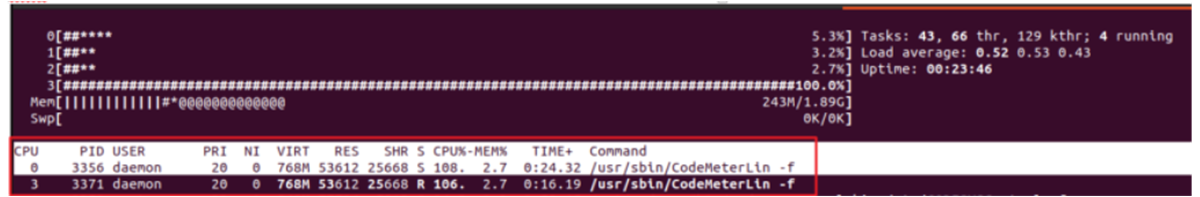

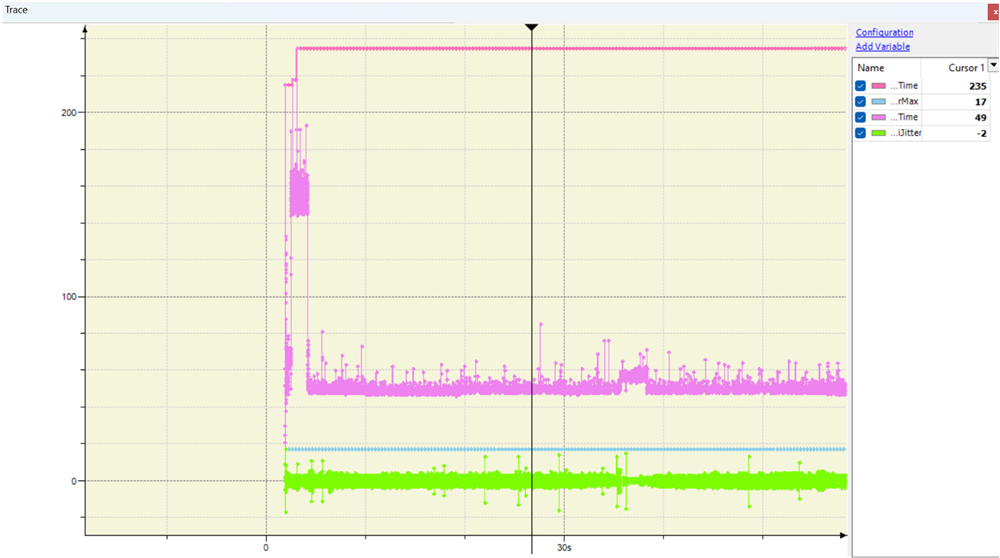

A major influence on cycle time is the CODESYS licensing application, Codemeter. Most EtherCAT applications that use CODESYS must run Codemeter to enable unlimited runtime. Without the licensing application, CODESYS runs for only about 30 minutes before the system terminates and must be restarted (see also, CODESYS Control Standard S). Codemeter is therefore necessary for benchmarking in an environment close to a real EtherCAT use case. However, approximately every 5 to 6 minutes, the application spikes to nearly 100% CPU load, which in turn causes a spike in cycle time (from around 350µs to around 1000µs) and in jitter. This spike can be observed by monitoring htop in Linux and by watching the cycle time and jitter over time in the CODESYS Development System GUI.
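The load spike can also be logged without htop by sampling a process's accumulated CPU time from `/proc/<pid>/stat`. The following is a sketch under stated assumptions: it is Linux only, the function names are hypothetical, and the PID of the Codemeter daemon has to be supplied by the user.

```python
import os
import time


def parse_cpu_ticks(stat_line):
    """Return utime + stime (clock ticks) from a /proc/<pid>/stat line."""
    # The command name (field 2) is in parentheses and may contain spaces,
    # so split after the LAST ')' before counting fields.
    rest = stat_line.rsplit(")", 1)[1].split()
    # rest[0] is field 3 (state); utime and stime are fields 14 and 15.
    return int(rest[11]) + int(rest[12])


def cpu_percent(ticks_before, ticks_after, interval_s, ticks_per_s):
    """CPU load of one process over the interval, in percent of one core."""
    return 100.0 * (ticks_after - ticks_before) / (ticks_per_s * interval_s)


def sample_process(pid, interval_s=1.0):
    """Measure the CPU load of `pid` over one interval (Linux only)."""
    ticks_per_s = os.sysconf("SC_CLK_TCK")
    path = f"/proc/{pid}/stat"
    with open(path) as f:
        before = parse_cpu_ticks(f.read())
    time.sleep(interval_s)
    with open(path) as f:
        after = parse_cpu_ticks(f.read())
    return cpu_percent(before, after, interval_s, ticks_per_s)
```

Calling `sample_process` in a loop and logging the result with a timestamp would make the 5 to 6 minute period visible. Note that the sampler itself adds some load.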

Figure 4-1 Codemeter CPU Spike in htop

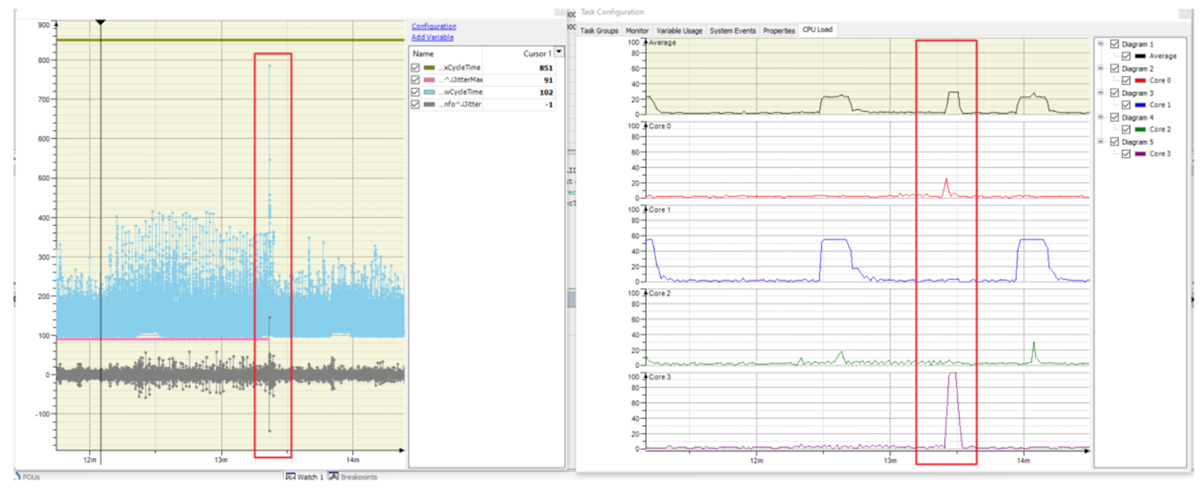

Figure 4-2 Spike in Maximum Cycle Time Due to Codemeter CPU Load Spike

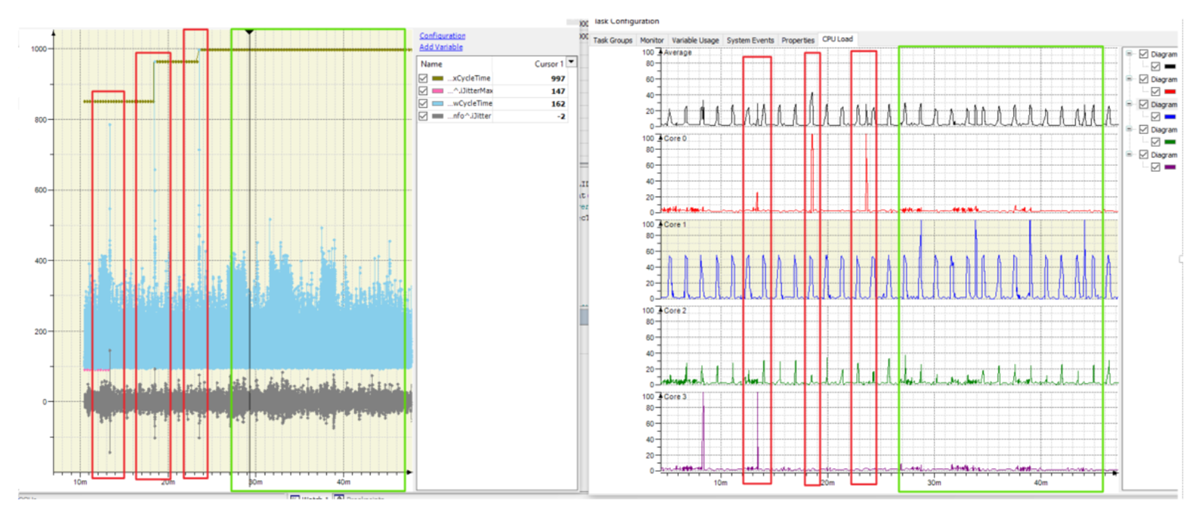

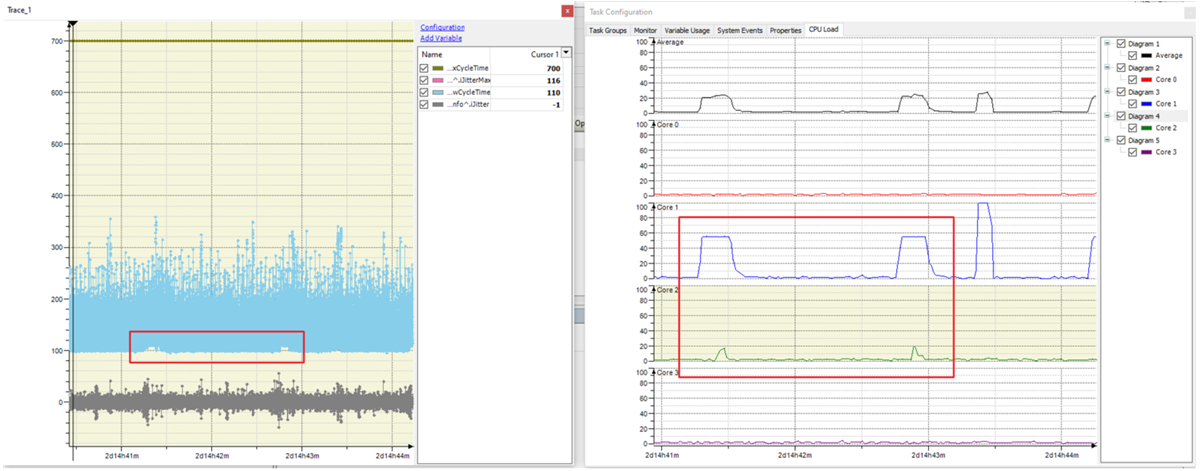

Upon further investigation, the CPU affinity of Codemeter appears to change between CPU 0, 2, and 3 by default and never uses CPU 1. CPU 1 is the core that the CODESYS control application runs on by default. Changing the CPU affinity of Codemeter to CPU 1 reduces the large cycle time spike from around 1000µs to around 500µs. The general spikes above 400µs in Figure 4-3 are due to running htop during those periods. Why the CPU affinity change reduces the spike is still unclear.
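The document does not state which tool was used to change the affinity. One possible way, shown here as a sketch, is Python's `os.sched_setaffinity` on Linux. The process name `CodeMeterLin` in the usage comment is an assumption and should be confirmed in htop. The helper names are hypothetical.

```python
import os


def find_pids_by_name(name, proc_root="/proc"):
    """Return PIDs whose /proc/<pid>/comm matches `name` exactly."""
    pids = []
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue
        try:
            with open(os.path.join(proc_root, entry, "comm")) as f:
                if f.read().strip() == name:
                    pids.append(int(entry))
        except OSError:
            # The process exited between listdir() and open(); skip it.
            continue
    return sorted(pids)


def pin_to_cpu(pid, cpu):
    """Restrict `pid` to a single CPU and return the resulting affinity set."""
    os.sched_setaffinity(pid, {cpu})
    return os.sched_getaffinity(pid)


# Example use (run as root on the target). "CodeMeterLin" is an ASSUMED
# process name:
#   for pid in find_pids_by_name("CodeMeterLin"):
#       print(pid, pin_to_cpu(pid, 1))
```

`os.sched_setaffinity` acts on a single thread ID, so this pins only the main thread. Any additional threads listed under `/proc/<pid>/task` would need the same call.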

Figure 4-3 Codemeter Application on CPU 1 and Resulting KPI in Green Boxes and on CPU0 or 3 in Red Boxes

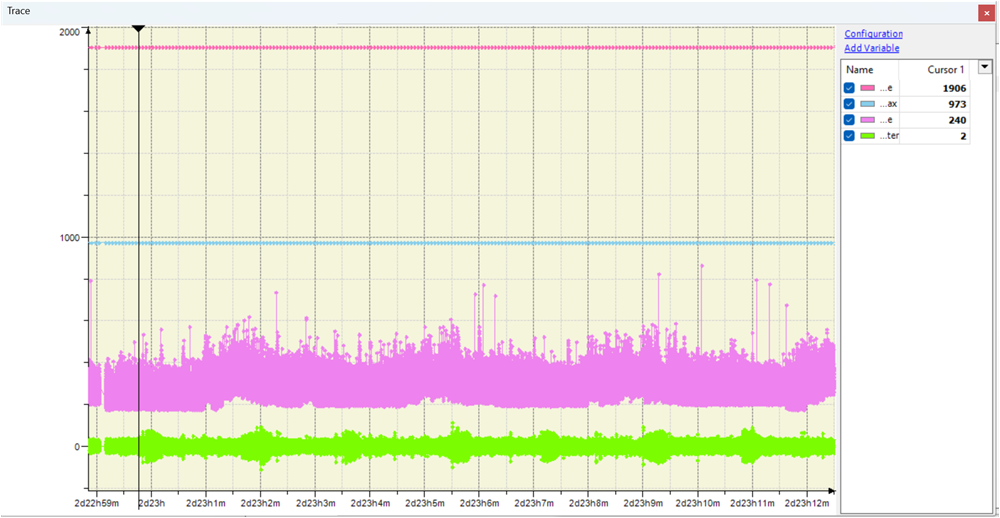

As an additional note, the maximum cycle time for all hardware platforms reported in Table 3-1 is an outlier related to the period of approximately 3 seconds during which the EtherCAT protocol starts up. During this period, the devices cycle through several states from the “Init” state to the “Operational” state, based on the EtherCAT State Machine (ESM), and the startup configuration is sent from the EtherCAT controller to the EtherCAT devices through acyclic mailbox protocols (see also, EtherCAT | System Description). For this reason, the performance analysis focuses on the period when the devices are in the steady state. A similar cycle time spike can also occur when logging out and back in through the CODESYS Development System interface. This use case is excluded from the analysis under the assumption that, in a real factory automation environment, constant logout and login is not likely to occur.
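For orientation, the ESM states and the order in which a device is stepped through them at startup can be sketched as follows. The state codes are the AL status values defined by the EtherCAT specification. The class and helper names are hypothetical, and the sketch models only the state order, not the mailbox traffic.

```python
from enum import IntEnum


class AlState(IntEnum):
    """EtherCAT State Machine states with their AL status codes."""
    INIT = 0x01
    PRE_OP = 0x02
    BOOT = 0x03      # Bootstrap: optional, not part of a normal startup
    SAFE_OP = 0x04
    OP = 0x08


# Order a device is stepped through at startup, from Init to Operational.
STARTUP_SEQUENCE = [AlState.INIT, AlState.PRE_OP, AlState.SAFE_OP, AlState.OP]


def is_steady_state(state):
    """True once the device has reached the Operational state."""
    return state == AlState.OP
```

Cycle time samples taken before every device reports `AlState.OP` belong to the startup period described above.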

The “Filtered Max Cycle Time” and “Filtered Max Jitter” columns in Table 3-1 refer to the approximate maximum cycle time and maximum jitter after filtering out the startup outlier. These filtered values are based on the last 2 hours, 46 minutes, and 39 seconds of runtime, because the CODESYS Development System buffer is only large enough to store data points for this period.
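The filtering can be illustrated with a short sketch. It assumes the trace is available as `(time_in_seconds, cycle_time_in_µs)` pairs, which is a hypothetical export format and not a CODESYS API. The function names are hypothetical, and the sample values in the usage comment are made up for illustration. The buffer window of 2 hours, 46 minutes, and 39 seconds works out to 9999 seconds.

```python
def window_seconds(hours, minutes, seconds):
    """Convert an h/m/s duration to seconds."""
    return hours * 3600 + minutes * 60 + seconds


def filtered_max(samples, startup_s=3.0, window_s=None):
    """Max cycle time over (t_seconds, cycle_us) samples.

    Samples with t < startup_s are dropped; if window_s is given, only
    samples within the last window_s seconds of the trace are kept.
    """
    if not samples:
        raise ValueError("no samples")
    t_end = max(t for t, _ in samples)
    t_min = startup_s
    if window_s is not None:
        t_min = max(t_min, t_end - window_s)
    kept = [c for t, c in samples if t >= t_min]
    if not kept:
        raise ValueError("all samples were filtered out")
    return max(kept)


# Illustrative values only:
#   trace = [(0.5, 700), (2.0, 650), (10.0, 480), (20.0, 500)]
#   filtered_max(trace)  # startup samples at 0.5 s and 2.0 s are ignored
```

The same filter applies to jitter samples, since the function does not depend on what the second element of each pair represents.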

Figure 4-4 KPI During Startup on AM62x as EtherCAT Controller

Figure 4-5 KPI During Startup on AM69 as EtherCAT Controller

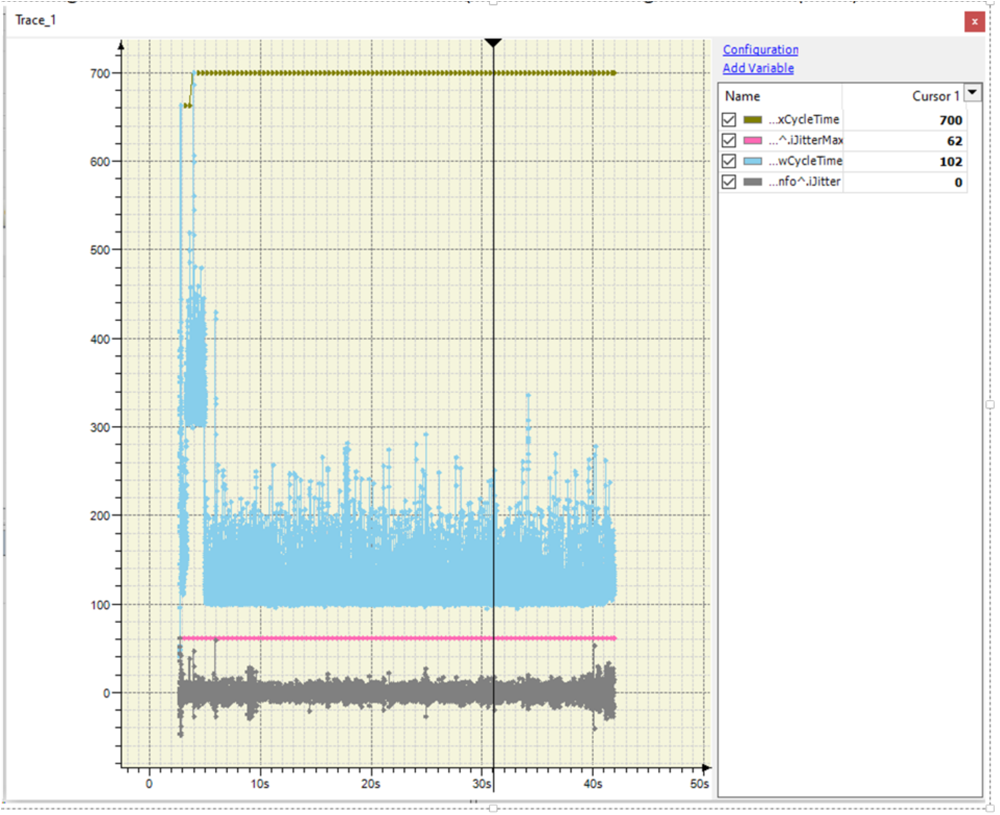

A snapshot of the final results of these optimizations for AM62x is shown in Figure 4-6, where the maximum cycle time is the 700µs outlier and the filtered maximum cycle time is estimated to be around 500µs. A number of periodic cycle time increases are still observed and have yet to be investigated.

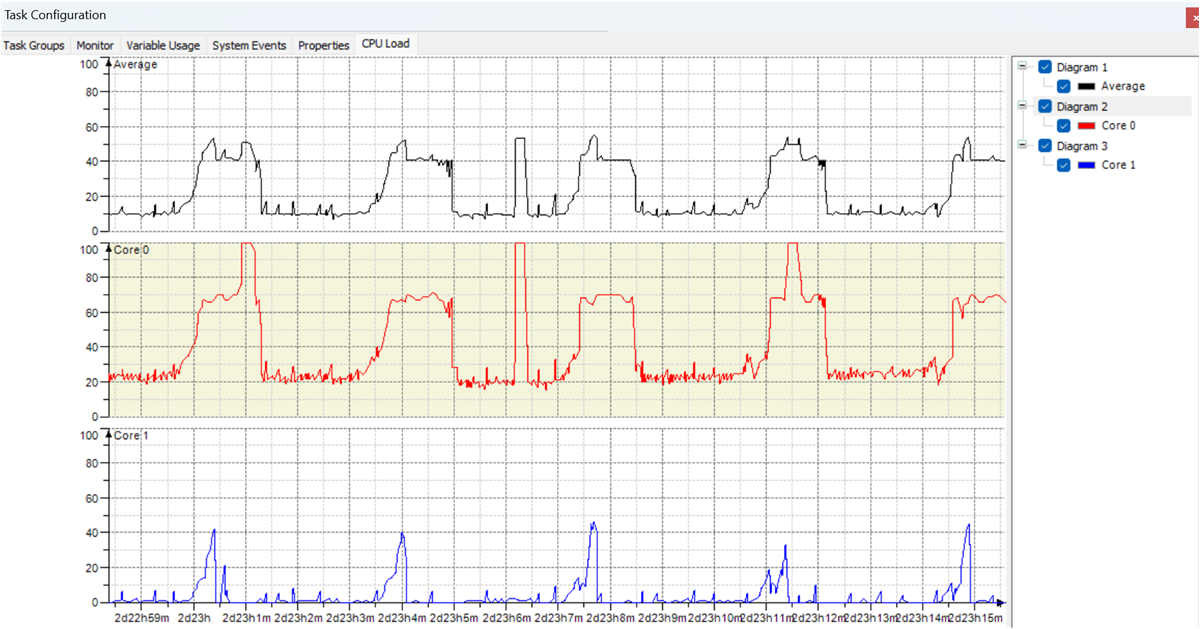

In general, the AM64x has lower performance than the AM62x. This is in part due to the reduction from four A53 cores to two, which leaves fewer cores for the tasks required by EtherCAT and for other background tasks. As a result, the overall CPU load across all cores is higher for AM64x than for AM62x. Differences in cache size, DDR speed, and bus width can also contribute to the performance differences between AM64x and AM62x. In addition, during the 63-hour runtime on the AM64x platform, sporadic spikes in cycle time ranging from 542µs to about 1032µs appear. The specific causes of this behavior on AM64x are still under investigation.

Figure 4-7 KPI on AM64x as EtherCAT Controller as Shown on CODESYS Development System

Figure 4-8 CPU Load of AM64x as EtherCAT Controller as Shown in CODESYS Development System
