SSZTC89 August 2015

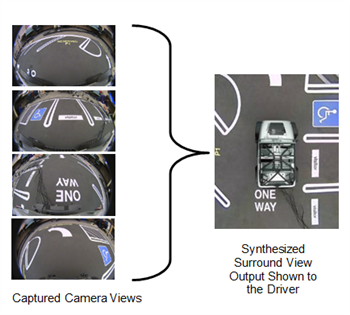

When driving cars in computer games, we can typically view the car and its surroundings as if we were looking at it from above or from behind. These views help us drive better by showing us what surrounds the car. Unfortunately, we have not had the same views when driving in real life, but this is changing with the introduction of automotive surround view systems (also called “bird’s-eye view” or “around view”). Surround view is an advanced driver assistance systems (ADAS) technology that shows the driver a bird’s-eye, 360-degree camera view of the car and its surroundings in real time to enable safer driving at low speeds, such as when parking. Since no actual camera looks down at the car from above, the bird’s-eye view shown to the driver is in fact a virtual view synthesized from a composite of four to six fish-eye cameras installed around the car, as shown in the example below.
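To make the idea of a synthesized virtual view concrete, here is a minimal sketch of the lookup behind a top-down view: for each point on the ground, find the fish-eye pixel that sees it. It assumes an ideal equidistant fish-eye model (image radius = focal length × angle from the optical axis) and a camera pointing straight down, which is a simplification; real systems use calibrated, tilted cameras. The function name and all parameters are hypothetical.

```python
import math

def ground_to_fisheye_pixel(x_m, y_m, cam_height_m, focal_px, cx, cy):
    """Map a ground point to a fish-eye pixel (illustrative assumption only).

    (x_m, y_m) is the ground offset in metres from the point directly below
    a camera that looks straight down from cam_height_m. The lens follows the
    equidistant model r = focal_px * theta, with principal point (cx, cy).
    """
    ground_dist = math.hypot(x_m, y_m)
    if ground_dist == 0.0:
        return (float(cx), float(cy))  # point on the optical axis
    theta = math.atan2(ground_dist, cam_height_m)  # angle from optical axis
    r = focal_px * theta  # radial distance in the image, in pixels
    return (cx + r * x_m / ground_dist, cy + r * y_m / ground_dist)
```

A top-down image would be filled by calling this for every output pixel's ground position and sampling the camera image at the returned coordinates.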

As surround view systems are incorporated into more cars, we are observing several key trends. One is the trend towards higher visual quality. Early surround view systems used low-resolution cameras and did not attempt seamless stitching. Instead, they put black bars where the different camera views border each other. More advanced systems seamlessly stitch the views to make the bird’s-eye view more realistic. An example is shown above from TI’s TDA2x-based surround view system, which includes seamless stitching. Achieving seamless stitching requires advanced geometric and photometric alignment algorithms that TI’s Digital Signal Processors (DSPs) can implement efficiently.

Visual quality can be further improved by applying Wide Dynamic Range (WDR) imaging algorithms in the surround view system. Automotive use cases include many situations where lighting differs significantly on different sides of the car, such as when a car is coming out of a parking garage. In these situations, WDR imaging can help create a surround view output where both dark and bright regions are clearly visible. Variants of TI’s TDA3x processor have WDR imaging capabilities, which can be applied to surround view systems.
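As a rough illustration of the photometric alignment and seamless stitching discussed above, the sketch below matches the brightness of two cameras in their shared overlap region and then cross-fades between them. This is a simplified assumption about how such a step could look, not TI’s algorithm; the function names are hypothetical, and pixels are plain grayscale values in the range 0 to 255.

```python
def overlap_gain(ref_pixels, other_pixels):
    """Gain that scales the other camera's mean brightness to the reference's."""
    mean_ref = sum(ref_pixels) / len(ref_pixels)
    mean_other = sum(other_pixels) / len(other_pixels)
    return mean_ref / mean_other if mean_other > 0 else 1.0

def blend_overlap(row_a, row_b, gain_b=1.0):
    """Linearly cross-fade one row of the overlap from camera A to camera B.

    Camera B's pixels are first corrected by gain_b and clipped to 255.
    """
    n = len(row_a)
    out = []
    for i, (a, b) in enumerate(zip(row_a, row_b)):
        w_b = i / (n - 1) if n > 1 else 0.5  # weight of camera B, 0 -> 1
        b_corrected = min(255.0, b * gain_b)
        out.append((1.0 - w_b) * a + w_b * b_corrected)
    return out
```

Without the gain correction, a uniform surface seen at brightness 100 by one camera and 50 by the other would show a visible ramp across the seam; with the gain from `overlap_gain` applied, the blended row stays flat.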

Another potential improvement in visual quality is video noise filtering. When surround view systems are used at night, the camera output typically contains high levels of noise due to low light. Advanced video noise filtering algorithms can be applied to reduce the noise and improve surround view quality. The TDA3x processor provides high-quality video noise filters.
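One simple family of video noise filters averages each pixel over time. The sketch below shows a recursive temporal filter as an illustration of the idea; it is an assumption chosen for brevity, not the filter implemented in the TDA3x, and it ignores motion, which practical filters must handle to avoid smearing moving objects. The function name and `alpha` parameter are hypothetical.

```python
def temporal_denoise(frames, alpha=0.25):
    """Recursive temporal filter: out_t = alpha * frame_t + (1 - alpha) * out_{t-1}.

    frames is a list of equally sized lists of pixel values. A smaller alpha
    smooths more strongly but reacts more slowly to scene changes.
    """
    filtered = []
    state = None
    for frame in frames:
        if state is None:
            state = [float(p) for p in frame]  # first frame passes through
        else:
            state = [alpha * p + (1.0 - alpha) * s for p, s in zip(frame, state)]
        filtered.append(list(state))
    return filtered
```

For a static scene, random noise in each frame is progressively averaged out while the underlying pixel value is preserved.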

Surround view systems typically include only the bird’s-eye camera view looking at the car from the top. One interesting additional capability is to let the camera viewpoint change dynamically so that the driver can look at the car from various directions and choose the one they prefer. This is accomplished with the help of GPU-based rendering. The TDA2x System-on-Chip (SoC) has an SGX544 graphics engine that can be used to implement 3D surround view with a dynamic, free camera viewpoint.

There are other areas of improvement for advanced surround view systems, such as refining the rendering of objects that are in the close vicinity of the car. Surround view systems currently stretch these objects while rendering them. Sometimes when these objects are close to stitching boundaries, ghosting artifacts are created. More advanced algorithms will reduce these distortions in the future and make the output video look more realistic.

In addition to the visual quality improvement trends discussed above, there is another set of interesting developments in which intelligent algorithms are implemented on top of surround view to detect certain events and alert the driver in case of an emergency. Pedestrians and other objects around the car can lead to collisions, so it is important for the driver to be aware of them. Vision algorithms that analyze the surround view video can detect these objects and overlay warnings on the surround view display to focus the driver’s attention. These algorithms could also fuse additional sensors (such as ultrasonic) with the surround view camera system to achieve higher robustness. In the future, we can expect surround view systems to even achieve autonomous driving at low speeds.
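The fusion of camera-based detection with an ultrasonic sensor can be sketched as a small decision rule: raise the strongest warning only when both sensors agree, and a weaker one when just one of them fires. Everything here is a hypothetical illustration, including the `Detection` type, the thresholds, and the warning labels; a production system would use calibrated sensor models rather than fixed cut-offs.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Detection:
    label: str         # e.g. "pedestrian" (hypothetical detector output)
    confidence: float  # detector score in the range 0..1

def warning_level(detection: Optional[Detection],
                  ultrasonic_m: Optional[float],
                  near_m: float = 1.0,
                  conf_threshold: float = 0.5) -> str:
    """Combine a vision detection and an ultrasonic range into a warning level."""
    vision_hit = detection is not None and detection.confidence >= conf_threshold
    sonar_hit = ultrasonic_m is not None and ultrasonic_m <= near_m
    if vision_hit and sonar_hit:
        return "alert"    # both sensors agree that an object is close
    if vision_hit or sonar_hit:
        return "caution"  # only one sensor reports an object
    return "clear"
```

The returned level could then select which overlay, if any, is drawn on the surround view display.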