SPRUJ53B April 2024 – September 2024 TMS320F28P550SJ , TMS320F28P559SJ-Q1

8.1 Introduction

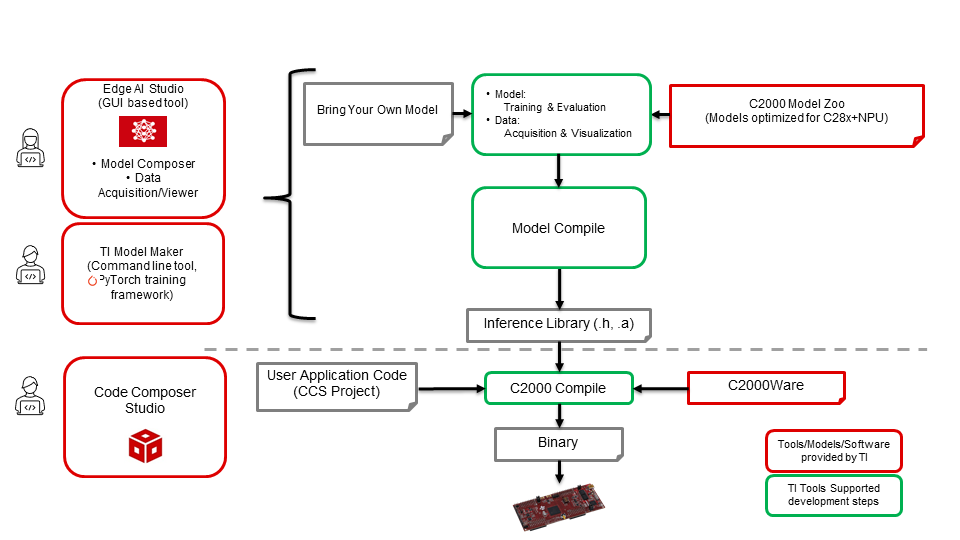

The Neural-network Processing Unit (NPU) supports intelligent inferencing by running pre-trained models. The NPU is capable of 600–1200 MOPS (mega operations per second), and example models are available for arc fault detection and motor fault detection. Compared with a software-only implementation, the NPU provides up to a 10x improvement in neural network (NN) inferencing cycles. Models can be loaded and trained with tools from TI: the Model Composer GUI, or TI's command-line Modelmaker tool for a more advanced set of capabilities. Both options automatically generate source code for the C28x, eliminating the need to write code manually.
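To put the 600–1200 MOPS range in perspective, the following host-side sketch converts an operation count into an idealized execution time. The model size (`MODEL_OPS`) and the function name are hypothetical values chosen only for illustration, not TI figures or APIs. The result assumes the NPU sustains the quoted throughput for the whole inference, so treat it as a rough lower bound; actual latency depends on the model structure and on data movement.

```python
def ideal_inference_time_us(model_ops: int, throughput_mops: float) -> float:
    """Return the idealized time, in microseconds, to execute model_ops operations."""
    if throughput_mops <= 0:
        raise ValueError("throughput_mops must be positive")
    # 1 MOPS = 1e6 operations per second = 1 operation per microsecond,
    # so operations / MOPS gives a time directly in microseconds.
    return model_ops / throughput_mops


# Hypothetical model size, for illustration only (not a TI figure).
MODEL_OPS = 300_000

for mops in (600, 1200):
    print(f"{mops} MOPS: {ideal_inference_time_us(MODEL_OPS, mops):.0f} us")
```

Under these assumptions, the hypothetical 300,000-operation model takes about 500 µs at 600 MOPS and about 250 µs at 1200 MOPS.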

Figure 8-1 shows the toolchain and the steps to add NPU support to a project: importing or using existing models from TI, training the models, generating the associated software libraries, and integrating the libraries into an existing Code Composer Studio™ IDE project.

Figure 8-1 NPU Development Flow
