SSZT443 July 2019 AWR2243

There is still some confusion in the industry about the different roles that three major sensor types – camera, radar and LIDAR – have in a vehicle, and how each can solve the sensing needs of advanced driver assistance systems (ADAS) and autonomous driving.

Recently, I had an interesting discussion with one of my friends, who knows I work with TI millimeter-wave (mmWave) radar sensors for ADAS and autonomous vehicles (AVs).

My friend never misses an opportunity to tease me whenever he reads about how an autonomous vehicle performed in a challenging driving situation, such as obstacle detection. Here’s how one conversation went:

Matt: “If that car had LIDAR, it would have easily identified the object in the middle of the lane.”

Me: “As always, I disagree.”

Matt: “What?! Why would you disagree? There was a camera sensor and a radar sensor in that vehicle, and still the ADAS system totally missed the vehicle in the middle of the lane.”

Me: “When you read about these recent events, you’ll notice that the cameras are often exposed to glare or other elements that cause the camera to miss an object in the road. Cameras are sensitive to high-contrast light and poor visibility conditions, such as rain, fog and snow. In this case, the radar sensor probably did identify the target.”

Matt: “Still, we keep running into different situations that these ADAS and AV systems seem to find very challenging. What is missing?”

Me: “It seems the ADAS’ decision-making system relied on the camera as the primary sensor to decide if the target was really there, or whether it was a false alarm.”

Matt: “So the car’s radar and camera can’t be trusted, and you are left with LIDAR as the only reliable sensor. Isn’t that right?”

Me: “Not quite. LIDAR is not as sensitive to visibility conditions as the camera is, but it is sensitive to weather conditions like fog, rain and snow. In addition, LIDAR is still considered to be very expensive, which probably limits its usage initially to higher-end level 4 and 5 autonomous vehicles.”

Matt: “So we are doomed! There is no single sensor that can make autonomous vehicles truly reliable. We will always need all three, which means very expensive autonomous vehicles.”

Me: “You are partly right. Level 4 and 5 autonomous vehicles will probably need all three sensors – camera, LIDAR and radar – to provide high reliability and a fully autonomous driving experience.

However, for more economical vehicles requiring partial autonomy at levels 2 and 3, where high-volume mass production has already started, imaging radar using TI mmWave sensors delivers the high performance and cost-effectiveness that can enable broad adoption of ADAS functionality.”

So What Is Imaging Radar?

Figure 1 An Imaging Radar Evaluation Module with Four Cascaded TI mmWave Sensors

mmWave Technology for Imaging Radar

A common use case that highlights the imaging radar sensor’s advantage is its ability to resolve static objects at high resolution. The typical mmWave sensor has high velocity and range resolution, which enables it to easily identify and differentiate between moving objects, but it is far more limited with static objects, which cannot be told apart by their own motion.

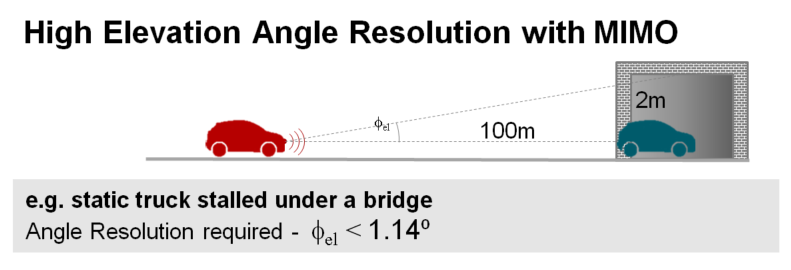

For example, for a sensor to “see” a stopped vehicle in the middle of the lane and distinguish it from light poles or a fence, the sensor requires sufficient angular resolution in both elevation and azimuth.
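To put “high velocity and range resolution” into numbers, the standard FMCW relations are ΔR = c/(2B) for range, where B is the chirp’s swept bandwidth, and Δv = λ/(2·T_f) for velocity, where T_f is the frame time spanned by the chirps used for Doppler processing. The following Python sketch evaluates them with assumed, illustrative parameters (a 4 GHz sweep, a 77 GHz carrier and 128 chirps of 40 µs each); these are not a configuration taken from this article or from any specific TI device.

```python
C = 3.0e8  # speed of light in m/s (rounded)


def range_resolution(bandwidth_hz: float) -> float:
    """FMCW range resolution: delta_R = c / (2 * B)."""
    return C / (2.0 * bandwidth_hz)


def velocity_resolution(carrier_hz: float, n_chirps: int, chirp_time_s: float) -> float:
    """FMCW velocity resolution: delta_v = lambda / (2 * T_f),
    where T_f = n_chirps * chirp_time_s is the frame time."""
    wavelength = C / carrier_hz
    frame_time = n_chirps * chirp_time_s
    return wavelength / (2.0 * frame_time)


# Assumed, illustrative chirp parameters (not a specific device configuration).
dr = range_resolution(4e9)                  # 4 GHz swept bandwidth
dv = velocity_resolution(77e9, 128, 40e-6)  # 128 chirps of 40 us at 77 GHz
print(f"range resolution:    {dr * 100:.2f} cm")
print(f"velocity resolution: {dv:.3f} m/s")
```

With these assumptions the sketch gives about 3.75 cm and about 0.38 m/s. Fine range and Doppler bins separate targets that differ in distance or speed, but a stopped car and a fence or light pole at a similar distance differ little in either, so separating them falls to angular resolution.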

Figure 2 shows a vehicle stuck in a tunnel with smoke coming out of it. The vehicle is approximately 100 m away and the tunnel height is 3 m.

Figure 2 The Front Radar of the Approaching Car Needs a High-enough Angle Resolution to Differentiate between the Tunnel and the Stopped Vehicle. mmWave Sensors Can See through Any Visibility Conditions, like Smoke.

In order to identify the vehicle in the tunnel shown in Figure 2, the sensor needs to differentiate it from the tunnel ceiling and walls.

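Achieving this scene classification requires the following elevation and azimuth angular resolutions:

$$\theta_{\text{elevation}} = \tan^{-1}\left(\frac{2\ \text{m}}{100\ \text{m}}\right) \approx 1.15^\circ \qquad \theta_{\text{azimuth}} = \tan^{-1}\left(\frac{3.5\ \text{m}}{100\ \text{m}}\right) \approx 2^\circ$$

where 2 m is the tunnel height minus the vehicle height, 100 m is the distance between the approaching vehicle equipped with imaging radar and the vehicle stopped in the tunnel, and 3.5 m is the distance between the stopped vehicle and the tunnel walls. Figure 3 illustrates how mmWave sensors achieve high angular resolution in order to “see” the vehicle.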
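The required resolutions are simply the angles subtended, as seen from the approaching car, by the 2 m vertical gap and the 3.5 m lateral gap. A minimal Python check of that geometry follows; the 100 m case is the scenario in the text, while 50 m and 150 m are added only for comparison.

```python
import math


def required_angle_deg(separation_m: float, distance_m: float) -> float:
    """Angle (degrees) subtended by a separation seen from a given distance."""
    return math.degrees(math.atan(separation_m / distance_m))


VERTICAL_GAP_M = 2.0  # tunnel height minus vehicle height (from the text)
LATERAL_GAP_M = 3.5   # stopped vehicle to tunnel wall (from the text)

# 100 m is the scenario in the text; 50 m and 150 m are illustrative extras.
for distance_m in (50.0, 100.0, 150.0):
    elevation = required_angle_deg(VERTICAL_GAP_M, distance_m)
    azimuth = required_angle_deg(LATERAL_GAP_M, distance_m)
    print(f"{distance_m:5.0f} m: elevation {elevation:.2f} deg, azimuth {azimuth:.2f} deg")
```

At 100 m this reproduces roughly 1.15 degrees in elevation and 2 degrees in azimuth. Because the subtended angle shrinks as distance grows, recognizing the same scene earlier, at longer range, demands finer angular resolution.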

Figure 3 How mmWave Sensors Achieve High Elevation Angular Resolution with Multiple-input Multiple-output (MIMO) Radar.

Relying on optical sensors alone may be challenging in certain weather and visibility conditions. Smoke, fog, bad weather, and strong light-dark contrasts can inhibit passive and active optical sensors such as cameras and LIDAR, which may then fail to identify a target. TI mmWave sensors, however, maintain robust performance despite challenging weather and visibility conditions.

Currently, the only sensor that remains robust in every weather and visibility condition and can achieve 1-degree angular resolution in both azimuth and elevation (and even finer resolution with super-resolution algorithms) is the imaging radar sensor.
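One common way to reason about a figure like 1 degree is the MIMO virtual-array rule of thumb: N_tx transmitters and N_rx receivers form up to N_tx·N_rx virtual channels, and a uniform linear array of N elements at half-wavelength spacing resolves roughly 2/(N·cos θ) radians. The Python sketch below applies that approximation. The channel counts are assumptions for illustration: 3 TX and 4 RX for a single AWR2243, and 12 TX and 16 RX for four cascaded devices as in Figure 1. Real imaging-radar antenna layouts are two-dimensional and more complex than this 1-D model.

```python
import math


def virtual_channels(n_tx: int, n_rx: int) -> int:
    """Upper bound on MIMO virtual channels: one per TX/RX pair."""
    return n_tx * n_rx


def angular_resolution_deg(n_elements: int, steer_deg: float = 0.0) -> float:
    """Rule-of-thumb resolution of a uniform linear array with half-wavelength
    spacing: theta_res ~ 2 / (N * cos(theta)) radians, returned in degrees."""
    return math.degrees(2.0 / (n_elements * math.cos(math.radians(steer_deg))))


def elements_for_resolution(target_deg: float) -> int:
    """Smallest N whose boresight resolution (2 / N radians) meets target_deg."""
    return math.ceil(2.0 / math.radians(target_deg))


# Assumed channel counts: one device (3 TX, 4 RX) vs. four cascaded devices.
for label, n_tx, n_rx in (("single device", 3, 4), ("four cascaded", 12, 16)):
    n = virtual_channels(n_tx, n_rx)
    print(f"{label}: {n} virtual channels, ~{angular_resolution_deg(n):.2f} deg "
          "if all were placed along one axis")

print("elements along one axis for 1 deg:", elements_for_resolution(1.0))
```

Under this approximation a single 12-channel device resolves only about 9.5 degrees, while reaching 1 degree on one axis takes roughly 115 elements. The 192 virtual channels of a four-device cascade exceed that in total, but an imaging radar that must resolve both axes divides them between azimuth and elevation, so the 1-D figure is only a rough guide.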

Conclusion

Additional Resources

- Learn more about TI imaging radar and the AWR2243 device.

- Watch the video, “mmWave Automotive Imaging Radar System – Long Range Detection.”

- Read the blog post, “Are we there yet? Expectations vs. reality in autonomous driving.”

- Start your design with the “Automotive imaging radar reference design using the 77-GHz mmWave sensor” and the imaging radar evaluation module.