SWRA774 May 2023 IWRL6432

5 Case-Study-2: Gesture Recognition

The high range and velocity (Doppler) resolution achievable with FMCW radar makes it a good fit for gesture-based touchless interfaces. Potential applications include controlling household electronics such as a TV, light, or thermostat, controlling a car's infotainment system, and kick-based trunk opening.

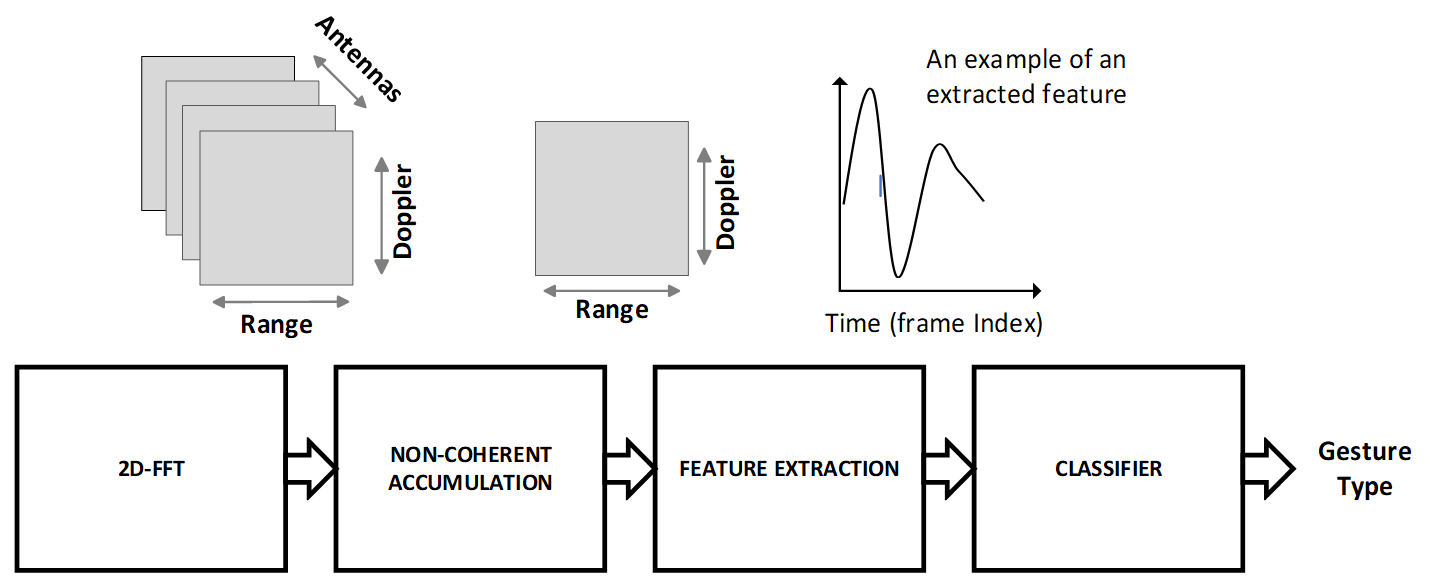

Figure 5-1 Processing Chain for Gesture Recognition

Figure 5-1 shows a representative processing chain for a gesture recognition application [5]. First, the device performs a 2-D FFT on the ADC data collected across the chirps in a frame, which resolves the scene in range and Doppler. A 2-D FFT matrix is computed for each RX antenna (or for each virtual antenna if the radar is operating in MIMO mode). Non-coherent accumulation of these 2-D FFT matrices across antennas creates a range-Doppler heat map. The next step extracts multiple hand-crafted features from the range-Doppler heat map. We have identified a set of 10 features that are useful in discriminating a variety of gestures. The hand-crafted features include basic statistics such as the average range, Doppler, azimuth angle, and elevation angle, with each average weighted by the signal level in the corresponding range-Doppler cell. Note that the angle statistics cannot be computed solely from the range-Doppler heat map; they require access to the complex radar cube data. Additional feature vectors are created from the correlation of the basic feature vectors (such as the correlation of Doppler and angle).
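The first stages of this chain can be illustrated with a minimal sketch. It is not the device implementation: it assumes complex baseband samples, uses a naive DFT in place of the FFT hardware, and covers only the weighted range and Doppler averages (the angle features need the complex radar cube and are omitted). All function names and the synthetic scene are hypothetical.

```python
import cmath
import math


def dft(x):
    """Naive O(N^2) DFT; a real implementation would use an FFT."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def range_doppler_map(frame):
    """frame[chirp][sample] for ONE antenna -> rd[range_bin][doppler_bin]."""
    # Range DFT along the samples of each chirp.
    range_fft = [dft(chirp) for chirp in frame]
    num_chirps, num_range = len(frame), len(frame[0])
    # Doppler DFT across chirps, one range bin at a time.
    return [
        dft([range_fft[c][r] for c in range(num_chirps)])
        for r in range(num_range)
    ]


def heat_map(cube):
    """Non-coherent accumulation: sum |X|^2 over antennas.

    cube[antenna][chirp][sample] -> heat[range_bin][doppler_bin]
    """
    maps = [range_doppler_map(frame) for frame in cube]
    num_range, num_doppler = len(maps[0]), len(maps[0][0])
    return [
        [sum(abs(m[r][d]) ** 2 for m in maps) for d in range(num_doppler)]
        for r in range(num_range)
    ]


def weighted_means(heat):
    """Signal-weighted average range bin and signed Doppler bin."""
    num_doppler = len(heat[0])
    total = w_range = w_doppler = 0.0
    for r, row in enumerate(heat):
        for d, power in enumerate(row):
            # Upper half of the DFT indices represents negative Doppler.
            signed_d = d if d < num_doppler // 2 else d - num_doppler
            total += power
            w_range += power * r
            w_doppler += power * signed_d
    return w_range / total, w_doppler / total


# Synthetic scene (assumed): one point target at range bin 3, Doppler bin -2,
# seen identically by 2 antennas, 8 chirps per frame, 16 samples per chirp.
NUM_ANT, NUM_CHIRPS, NUM_SAMPLES = 2, 8, 16
cube = [
    [
        [
            cmath.exp(2j * math.pi * (3 * s / NUM_SAMPLES - 2 * c / NUM_CHIRPS))
            for s in range(NUM_SAMPLES)
        ]
        for c in range(NUM_CHIRPS)
    ]
    for _ in range(NUM_ANT)
]
heat = heat_map(cube)
mean_range, mean_doppler = weighted_means(heat)
print(round(mean_range, 3), round(mean_doppler, 3))
```

For this noise-free single-target scene, the weighted averages should land on the target's own range and Doppler bins. With a moving hand, the same two numbers vary from frame to frame, and that variation is what the classifier sees.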

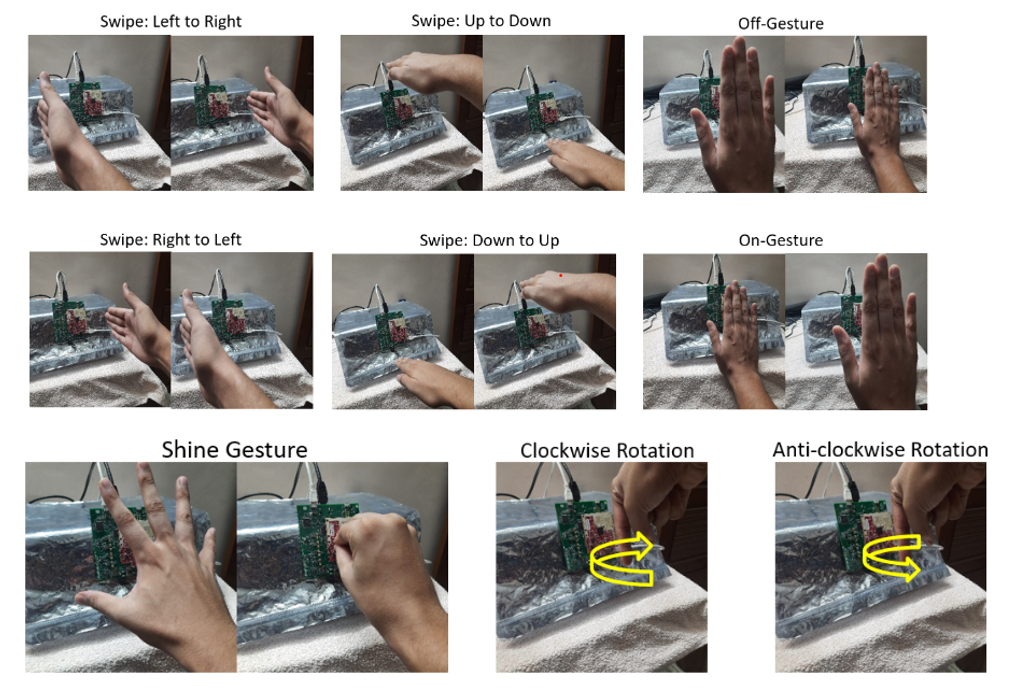

Figure 5-2 Example Gestures

The features extracted from the range-Doppler heat map across a set of consecutive frames are sent as input to an artificial neural network (ANN) for classification.
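One way to assemble per-frame features into a classifier input is a sliding window over the most recent frames. The sketch below is illustrative only: the `FeatureWindow` class, the frame-major ordering, and the small window size are assumptions, not details taken from the reference implementation.

```python
from collections import deque


class FeatureWindow:
    """Keeps the latest `num_frames` per-frame feature vectors and flattens them."""

    def __init__(self, num_features, num_frames):
        self.num_features = num_features
        self.frames = deque(maxlen=num_frames)  # oldest frame drops out automatically

    def push(self, features):
        if len(features) != self.num_features:
            raise ValueError("unexpected feature count")
        self.frames.append(list(features))

    def ready(self):
        """True once a full window of frames has been collected."""
        return len(self.frames) == self.frames.maxlen

    def vector(self):
        # Frame-major flattening, oldest frame first (an assumed ordering).
        return [value for frame in self.frames for value in frame]


# Toy sizes for readability: 2 features per frame, window of 3 frames.
window = FeatureWindow(num_features=2, num_frames=3)
for frame_index in range(5):
    window.push([frame_index, 10 * frame_index])  # placeholder feature values
    if window.ready():
        print(window.vector())
```

Each new frame then yields a fresh input vector once the window is full, so the classifier can be invoked once per frame.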

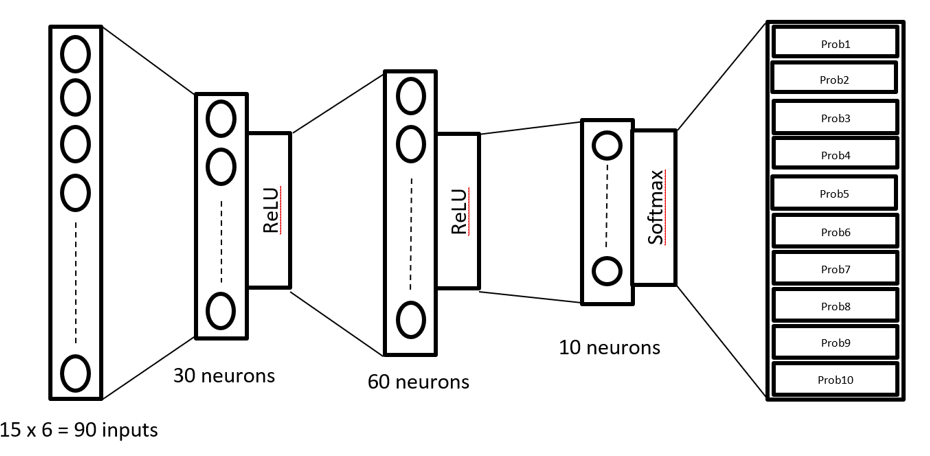

Figure 5-3 shows a representative implementation of an ANN for gesture recognition. The input to the ANN is a vector of length 60 consisting of 6 features across 15-frames. The first two layers of the ANN have 30 neurons and 60 neurons, respectively. The final softmax layer outputs 10 classes (nine classes corresponding to nine gestures shown in Figure 5-2 and one class indicating the absence of any valid gesture). This ANN was benchmarked on the M4 and found to take about 250us per invocation.

Figure 5-3 ANN Architecture for Gesture

Recognition
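To give a sense of the network's size, the following sketch runs a forward pass through fully connected layers with the stated dimensions (input length 60, then 30 and 60 neurons, then a 10-way softmax). The ReLU activations, the bias terms, and the random placeholder weights are assumptions; the text does not specify them, and a real deployment would load trained weights.

```python
import math
import random

# Input length, two hidden layers, softmax output (sizes taken from the text).
LAYER_SIZES = [60, 30, 60, 10]


def init_layers(sizes, seed=0):
    """Random placeholder weights; NOT trained values."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes, sizes[1:]):
        weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out  # one bias per neuron (assumed)
        layers.append((weights, biases))
    return layers


def dense(x, weights, biases):
    """Fully connected layer: one dot product plus bias per neuron."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def forward(x, layers):
    for weights, biases in layers[:-1]:
        x = [max(0.0, v) for v in dense(x, weights, biases)]  # ReLU (assumed)
    weights, biases = layers[-1]
    return softmax(dense(x, weights, biases))


layers = init_layers(LAYER_SIZES)
num_params = sum(len(w) * len(w[0]) + len(b) for w, b in layers)
num_macs = sum(len(w) * len(w[0]) for w, _ in layers)

rng = random.Random(1)
probs = forward([rng.random() for _ in range(LAYER_SIZES[0])], layers)
predicted_class = max(range(len(probs)), key=probs.__getitem__)
print(num_params, num_macs, len(probs), predicted_class)
```

Under these assumptions the network has 4300 parameters and needs 4200 multiply-accumulates per inference, which is small enough to run on a microcontroller-class core.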

Data collected from five subjects was used for training and validation. The classifier was then tested on 10 subjects (different from the subjects in the training set), achieving a testing accuracy of around 93%.
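Keeping the test subjects separate from the training subjects can be sketched as a split by subject ID. The record layout, the subject IDs, and the threshold classifier below are placeholders for illustration; they do not reproduce the data set or the results reported here.

```python
def split_by_subject(samples, test_subjects):
    """Split (subject_id, features, label) records so no subject is in both sets."""
    held_out = set(test_subjects)
    train = [s for s in samples if s[0] not in held_out]
    test = [s for s in samples if s[0] in held_out]
    return train, test


def accuracy(predicted, actual):
    """Fraction of predictions that match the ground-truth labels."""
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


# Placeholder records: (subject_id, feature_vector, gesture_label).
samples = [
    ("s1", [0.1], 0), ("s1", [0.9], 1),
    ("s2", [0.2], 0), ("s3", [0.8], 1),
]
train, test = split_by_subject(samples, test_subjects={"s2", "s3"})

# Stand-in classifier for illustration only: threshold on the single feature.
predicted = [int(features[0] > 0.5) for _, features, _ in test]
actual = [label for _, _, label in test]
test_accuracy = accuracy(predicted, actual)
print(len(train), len(test), test_accuracy)
```

Splitting by subject rather than by sample measures how well the classifier generalizes to people it has never seen.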